The core of the callgent architecture is to encapsulate every service and every user behind a callgent.

We then build entries into third-party platforms, so that users and services are connected anywhere, each in its native way: webpages, chat, email, APIs, and so on.

On top of this, we add a layer of semantic invocation, powered by large language models (LLMs), so that a callgent can understand and react to the intent of any user or system.

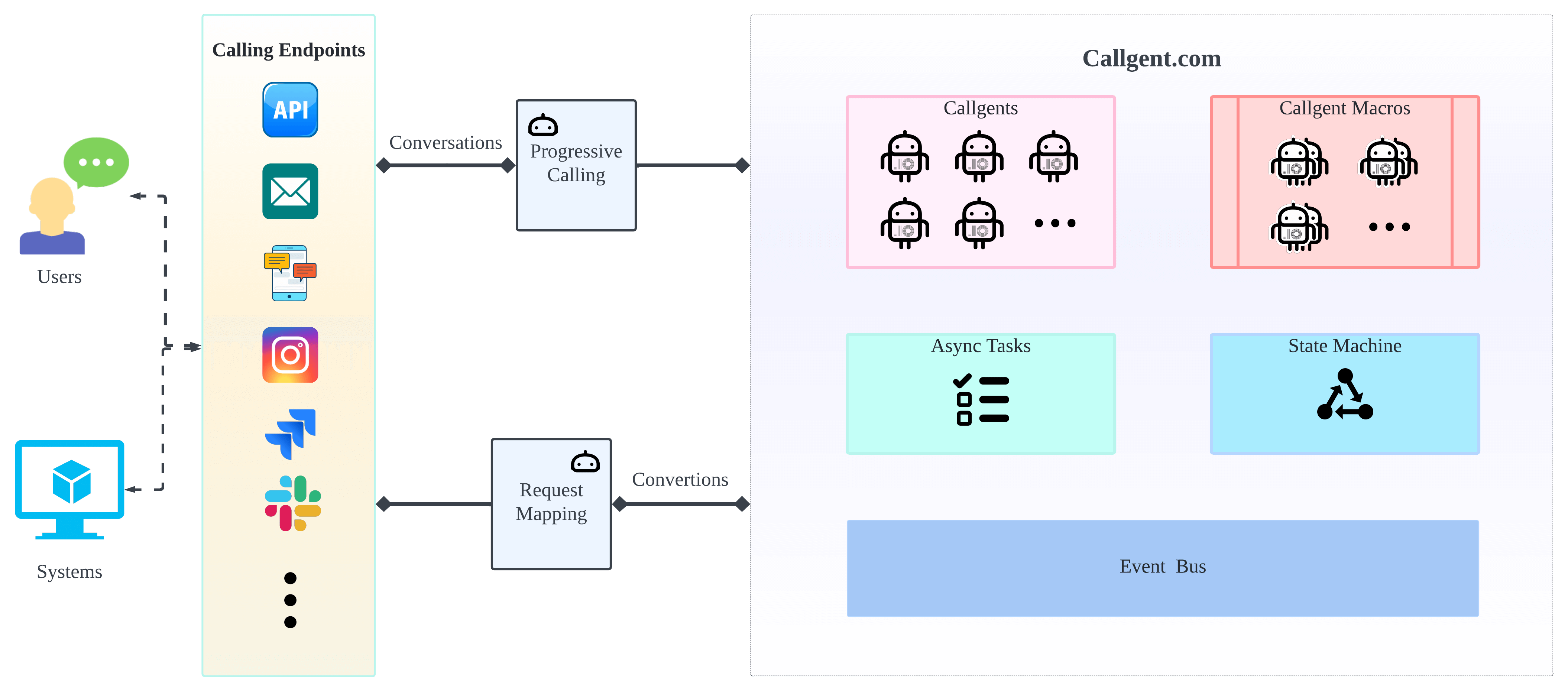

System components and processing flow are shown in the following diagram:

Each system service and each user is encapsulated in a Callgent.

A callgent is requested through various Client Entries, which are integrated into third-party platforms. Systems and users are thus connected anywhere in their native ways: webpages, chat, email, APIs, and so on.

From this perspective, a user or group behind a callgent is no different from an ordinary service: the system can invoke users just like an asynchronous API.

At the same time, a system service behind a callgent is also exposed as a user: anyone can chat with it in natural language.
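The symmetry between users and services can be illustrated with a minimal sketch. Everything here is hypothetical: the `Callgent` class, its `invoke` method, and the two handlers are names invented for illustration and are not taken from the Callgent codebase. The sketch only shows the idea that a caller awaits a result in the same way, whether a service or a person sits behind the callgent.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable

# Hypothetical type: anything that takes a request and eventually answers.
Handler = Callable[[str], Awaitable[str]]


@dataclass
class Callgent:
    """Illustrative wrapper; not the real Callgent API."""
    name: str
    handler: Handler

    async def invoke(self, request: str) -> str:
        # The caller does not know whether a service or a person answers.
        return await self.handler(request)


async def inventory_service(request: str) -> str:
    # Stand-in for a call to a real system API.
    return f"service handled: {request}"


async def human_approver(request: str) -> str:
    # Stand-in for a person who replies later by chat or email.
    await asyncio.sleep(0.01)
    return f"user replied to: {request}"


async def main() -> list[str]:
    callgents = [
        Callgent("inventory", inventory_service),
        Callgent("approver", human_approver),
    ]
    # Both kinds of callgent are invoked through the same async call.
    return await asyncio.gather(*(c.invoke("restock item 42") for c in callgents))


if __name__ == "__main__":
    print(asyncio.run(main()))
```

In a real deployment the human handler would presumably resolve only when a reply arrives through one of the client entries; the short sleep merely simulates that delay.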

Each invocation is an asynchronous task, which the callgent maintains intelligently:

- The callgent is able to understand and react to any user's or system's intents.
- The callgent is responsible for progressively collecting enough information to fulfill the task.
- The callgent automatically maps and converts task data into system invocation parameters or user conversations.
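The task handling described above can be sketched as a small data structure. This is an assumption-laden illustration rather than the actual implementation: the `Task` class and all of its methods are hypothetical names, and the LLM-based intent understanding is left out entirely. The sketch covers only progressive information collection and the final mapping to either invocation parameters or a prompt for the user.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """Hypothetical async task that gathers parameters step by step."""
    required: tuple[str, ...]
    collected: dict[str, str] = field(default_factory=dict)

    def missing(self) -> list[str]:
        # Parameters still needed before the task can be fulfilled.
        return [name for name in self.required if name not in self.collected]

    def provide(self, **values: str) -> None:
        # Information may arrive in several rounds, from users or systems.
        self.collected.update(values)

    def is_ready(self) -> bool:
        return not self.missing()

    def to_user_prompt(self) -> str:
        # Map the outstanding task data into a conversational request.
        return "Please provide: " + ", ".join(self.missing())

    def to_invocation_params(self) -> dict[str, str]:
        # Map the collected task data into parameters for a system call.
        if not self.is_ready():
            raise ValueError(f"missing parameters: {self.missing()}")
        return {name: self.collected[name] for name in self.required}


if __name__ == "__main__":
    task = Task(required=("item", "quantity"))
    task.provide(item="widget")
    print(task.to_user_prompt())
    task.provide(quantity="3")
    print(task.to_invocation_params())
```

Keeping the task state separate from the transport means the same task could, in principle, be continued from a chat message, an email reply, or an API call.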

With all users and services on the same platform, we can orchestrate them seamlessly.